At GE HealthCare, we understand that AI systems should be used as tools to serve people, respect human dignity, and uphold personal autonomy while functioning in ways that can be appropriately controlled and overseen by humans. This calls for a multifaceted approach, because AI is not a single technology but rather a collection of diverse technologies, each with specific capabilities. For this reason, we are committed to helping ensure that proper human oversight mechanisms are in place, tailored to the specific context of use and in compliance with applicable laws.

Trust is at the heart of everything we do at GE HealthCare. For more than 125 years, we have developed leading medical devices, patient care solutions, and pharmaceutical services, earning the confidence of healthcare providers and patients worldwide. With an installed base of over 5 million units, spanning X-ray, PET, Ultrasound, and ECG, our technologies support more than 1 billion patients annually, providing critical data and insights that drive quality care and life-saving decisions.

That is why we developed our Responsible AI Principles. These principles inform every stage of our product development, helping AI serve as a trusted partner in healthcare.

In this article, I will first outline our Principles for Responsible AI. Then, I will demonstrate how we bring these principles to life in tangible and impactful ways in the real world.

Our 7 Principles for Responsible AI

Our Responsible AI Principles are designed so that every AI system we engage or develop meets the highest standards of performance, safety, and accountability. These principles include the following key areas:

- Safety: GE HealthCare seeks to protect against harm to human life, health, property, or the environment associated with unintended applications or access to AI Systems at the organization. GE HealthCare is also committed to developing and using AI Systems in a sustainable and environmental manner that embraces opportunities to promote socially conscious use of AI in alignment with the GE HealthCare ESG goals.

- Validity and Reliability: We develop and deploy AI systems to consistently provide accurate outputs or behave within a defined range of acceptability when subject to expected conditions of use, helping provide reliability.

- Security and Resiliency: We design AI systems to help withstand unexpected adverse events or unexpected changes in their environment or use, maintaining confidentiality, integrity, and availability in the event of adversarial or unauthorized actions.

- Accountability and Transparency: We help facilitate the delivery of meaningful and timely information about every AI system to relevant stakeholders, tailored to the expected knowledge and accessibility needs of each audience. An accountability structure governs each decision made related to an AI system.

- Explainability and Interpretability: We design and document AI systems to allow for traceability and explainability so that they are able to answer, when needed, how and why a decision was made.

- Privacy-Enhanced: We develop AI systems that safeguard privacy, with an objective of using best practices to maintain human autonomy, identity, and dignity.

- Fairness with Harmful Bias Managed: GE HealthCare aims to develop and use AI Systems in a way that encourages fairness and increases access to care, while avoiding unlawful discrimination and appropriately mitigating unfair biases.

From Theory to Action

Let’s cover a few ways these principles can be put into action.

Hallucinations

Hallucinations in AI occur when a model generates false or misleading information that seems plausible but is not based on reality. These errors can be dangerous in healthcare, where inaccurate outputs may lead to incorrect diagnoses or treatments.

Prompt engineering helps mitigate hallucinations by refining how models generate responses. Clear prompts with sufficient context help improve accuracy. For example, structured inputs like medical history, symptoms, and imaging data guide the model’s output more effectively than open-ended prompts.
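To make the idea of structured inputs concrete, here is a minimal Python sketch of a prompt builder. The `CaseContext` class, its field names, and the instruction wording are assumptions made for illustration; they do not describe any GE HealthCare product or a specific model API.

```python
from dataclasses import dataclass, field


@dataclass
class CaseContext:
    """Hypothetical container for the structured inputs mentioned above."""
    medical_history: list[str] = field(default_factory=list)
    symptoms: list[str] = field(default_factory=list)
    imaging_findings: list[str] = field(default_factory=list)


def build_prompt(case: CaseContext, question: str) -> str:
    """Assemble a prompt whose context is explicit rather than open-ended."""

    def section(title: str, items: list[str]) -> str:
        # An empty section is stated explicitly so the model does not
        # silently fill the gap with invented details.
        body = "\n".join(f"- {item}" for item in items) if items else "- none provided"
        return f"{title}:\n{body}"

    return "\n\n".join([
        "Answer using only the context below. "
        "If the context is insufficient, say so instead of guessing.",
        section("Medical history", case.medical_history),
        section("Symptoms", case.symptoms),
        section("Imaging findings", case.imaging_findings),
        f"Question: {question}",
    ])
```

Calling `build_prompt(CaseContext(symptoms=["cough"]), "What follow-up is suggested?")` yields a prompt with three labeled sections, two of which are marked "none provided", followed by the question.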

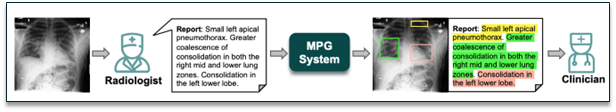

Retrieval-Augmented Generation (RAG) techniques reduce hallucinations by grounding responses in retrieved factual data. Visual grounding can further enhance accuracy by linking language descriptions to specific regions in medical images. For instance, a Medical Report Grounding (MRG) system can highlight areas of concern, such as lung consolidation in an X-ray, helping radiologists confirm the diagnosis based on the relevant region in the scan.
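The retrieval step can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: it ranks passages by simple word overlap as a stand-in for the embedding-based search a real system would more likely use, and the function names `retrieve` and `grounded_prompt` are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents ranked by word overlap with the query.

    Word overlap is a toy stand-in for vector similarity search.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Build a prompt that restricts the answer to retrieved passages."""
    passages = retrieve(query, documents, k)
    if not passages:
        # With nothing retrieved, do not ask the model to answer from memory.
        return f"No supporting passages were found for: {query}"
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered passages and cite them by number.\n\n"
        f"{numbered}\n\nQuestion: {query}"
    )
```

The design choice worth noting is the empty-retrieval branch: when no passage matches, the sketch declines to build an answerable prompt rather than letting the model respond without grounding.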

Explainability techniques and ontology-based reasoning can also enhance reliability. Ontology frameworks cross-check symptoms against known disease classifications to help prevent errors, while LLM temperature settings help keep outputs stable and reproducible.
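An ontology cross-check of this kind might look like the following Python sketch. The ontology here is a placeholder dictionary with made-up condition and symptom names, not clinical knowledge, and `check_suggestion` is a hypothetical helper; a real deployment would draw on a maintained medical ontology.

```python
# Hypothetical toy ontology: condition -> symptoms associated with it.
# The names are placeholders and carry no clinical meaning.
TOY_ONTOLOGY: dict[str, set[str]] = {
    "condition_a": {"symptom_1", "symptom_2"},
    "condition_b": {"symptom_2", "symptom_3"},
}


def check_suggestion(
    condition: str,
    observed: set[str],
    ontology: dict[str, set[str]] = TOY_ONTOLOGY,
) -> dict:
    """Flag a model-suggested condition that the ontology does not support."""
    if condition not in ontology:
        # The model named something outside the classification entirely.
        return {"status": "unknown_condition", "supporting": set()}
    supporting = observed & ontology[condition]
    status = "supported" if supporting else "needs_review"
    return {"status": status, "supporting": supporting}
```

A suggestion that comes back as `needs_review` or `unknown_condition` would be routed to a human reviewer rather than shown as a confident result.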

Visual grounding to link language descriptions to specific regions in images

Responsible AI in Design

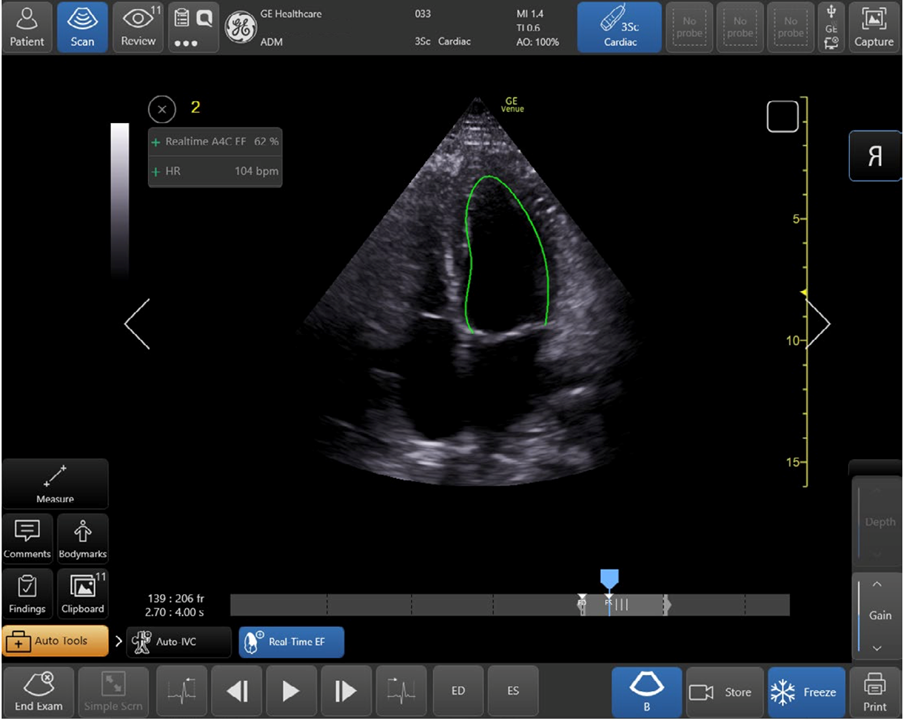

Our Responsible AI principles drive the design of tools with an aim of enhancing safety, transparency, and clinical efficacy. The Real-Time Ejection Fraction (EF) tool currently in development exemplifies this approach, streamlining left ventricular (LV) function assessment using point-of-care ultrasound (POCUS). LV function is vital for evaluating heart health. The tool provides rapid, semi-automated EF measurements with a multicolor Quality Indicator (green, yellow, red) to assess scan quality and consistency in real-time.

Real-time Ejection Fraction tool in action

Traditionally, EF calculation required manual imaging and measurements, taking 10–15 minutes and relying on specialized expertise. Our testing shows that this tool delivers results faster, which can help shorten assessments and support accuracy.

The tool demonstrates Responsible AI by helping facilitate transparency through a color-coded quality system and allowing users to review and select optimal data. It is designed to enhance safety with guidance during suboptimal scans and maintains accountability through manual confirmation and traceability of selected frames.
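The pattern described here, a quality indicator combined with manual confirmation and frame traceability, can be illustrated with a short Python sketch. It is not the tool's actual logic: the quality score, its thresholds, and the `Measurement` and `confirm` names are assumptions for illustration. Only the EF formula itself is the standard definition, EF = (EDV - ESV) / EDV × 100, where EDV and ESV are the end-diastolic and end-systolic volumes.

```python
from dataclasses import dataclass
from typing import Optional


def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Standard definition: EF (%) = (EDV - ESV) / EDV * 100."""
    if edv_ml <= 0 or not 0 <= esv_ml <= edv_ml:
        raise ValueError("expected EDV > 0 and 0 <= ESV <= EDV")
    return (edv_ml - esv_ml) / edv_ml * 100.0


def quality_color(score: float, green_at: float = 0.8, yellow_at: float = 0.5) -> str:
    """Map a 0-1 quality score to a color. Thresholds are illustrative assumptions."""
    if score >= green_at:
        return "green"
    if score >= yellow_at:
        return "yellow"
    return "red"


@dataclass
class Measurement:
    frame_ids: list[int]                 # frames the value was derived from (traceability)
    ef_percent: float
    quality: str
    confirmed_by: Optional[str] = None   # set only after a user confirms


def confirm(m: Measurement, user: str) -> Measurement:
    """Record manual confirmation; refuse when the scan quality is red."""
    if m.quality == "red":
        raise ValueError("suboptimal scan: reacquire before confirming")
    m.confirmed_by = user
    return m
```

In this sketch a value is never treated as final until `confirmed_by` is set, and the `frame_ids` field keeps the link back to the frames the user selected.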

By improving accessibility and reducing variability in results, the tool is designed to promote accuracy. Validated against expert measurements, it helps facilitate reliability, supporting timely diagnoses and increased access to advanced cardiac care.

Defining Intended Use Cases

Intended use cases define the specific applications for which an AI system is designed, helping it meet safety, performance, and regulatory requirements. This is particularly important for generalized technologies like foundation models, which have broad capabilities but must be tailored to specific tasks for reliable deployment.

By clearly defining intended use cases, we help maintain control over performance, support compliance, and mitigate risks. This approach is meant to help us unlock the full potential of foundation models.
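One way to picture an intended-use definition is as a declarative record that requests are checked against before a model is invoked. The Python sketch below is a simplified assumption of how that could look; the `IntendedUse` fields and the example values are hypothetical and do not reflect a regulatory format or a GE HealthCare system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntendedUse:
    """Hypothetical declaration of what a deployed model is meant to do."""
    task: str
    modalities: frozenset[str]
    user_roles: frozenset[str]


def in_scope(use: IntendedUse, task: str, modality: str, role: str) -> bool:
    """Return True only when a request matches the declared intended use."""
    return (
        task == use.task
        and modality in use.modalities
        and role in use.user_roles
    )


# Example declaration with placeholder values.
EXAMPLE_USE = IntendedUse(
    task="report_drafting",
    modalities=frozenset({"x-ray"}),
    user_roles=frozenset({"radiologist"}),
)
```

With this declaration, `in_scope(EXAMPLE_USE, "report_drafting", "x-ray", "radiologist")` is `True`, while the same request for a different modality or role is rejected before the model is called.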

I'll cover other ways we can help facilitate the responsible rollout of AI in future discussions. For now, I hope the examples provided illustrate how Responsible AI is not just a principle at GE HealthCare; it is embedded into our product development processes, guiding our approach to innovation and patient care.